Idea

What follows is an attempt to describe a simple data pipeline for basic statistical analysis. The purpose of this guide is to illustrate the possibilities of working with third-party data sources, from both a data wrangling and a presentation point of view.

The information presented is for demonstration purposes only, not for research or any other purpose. No part of these pages, either text or images, may be used for any purpose other than personal use.

Intro

Quarantine brings self-isolation and, as a result, time to think about things that are otherwise often overlooked, such as practising statistical data analysis. More precisely, designing a pipeline that makes such analysis possible in an automated fashion. For that purpose, we are going to use COVID spread data to get insights about the past, the present and maybe the dynamics of future growth. We are going to design a pipeline to ingest, analyse and visualize the results in the form of a web application. To make it a bit more interesting, we'll add one more constraint: we are going to spend $0.00 on hosting and maintaining this project.

Theory

First, let's get some terminology out of the way.

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. (wikipedia)

In other words, a data pipeline is a sequence of actions that moves data through stages. The first action in the sequence is often called ingestion and deals with downloading data from sources, while the last step moves data to the destination for storage and analysis. What happens in between depends on the design of the pipeline itself and varies from simply moving data from one place to another (extraction) to preparing data for ML processing.
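The idea of stages connected in series can be sketched in a few lines of Python. This is only an illustration under assumptions: the stage names `ingest`, `parse` and `store`, the hard-coded rows and the in-memory destination are all hypothetical and are not part of the project described here.

```python
from functools import reduce
from typing import Any, Callable, Iterable

Stage = Callable[[Any], Any]


def run_pipeline(stages: Iterable[Stage], initial: Any = None) -> Any:
    """Run the stages in series: each output becomes the next input."""
    return reduce(lambda data, stage: stage(data), stages, initial)


def ingest(_: Any) -> list:
    # Hypothetical source: hard-coded rows stand in for a real download.
    return ["2020-03-01,4", "2020-03-02,6", "not a valid row"]


def parse(rows: list) -> list:
    # Transformation: keep only rows shaped like "<date>,<count>".
    records = []
    for row in rows:
        date, _, count = row.partition(",")
        if count.isdigit():
            records.append({"date": date, "cases": int(count)})
    return records


def store(records: list) -> dict:
    # Hypothetical destination: an in-memory dict instead of a database.
    return {record["date"]: record["cases"] for record in records}


result = run_pipeline([ingest, parse, store])
print(result)
```

Because every stage takes one value and returns one value, stages can be added, removed or reordered without touching `run_pipeline`.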

Source

Sources of data come in different shapes and sizes. They evolve together with the industry itself. Not so long ago we had just relational databases. Later came APIs and webhooks over HTTP. These days, it's not uncommon to deal with data in the form of a stream. For example:

Twitter data stream consumption developer guide: Consuming streaming data

Destination

A destination is anything that holds the end result of the pipeline. It does not have to be data at rest (a database). It could be of exactly the same type as the source, or even the source itself (self-transformation).

Transformation

Any operation applied to data in order to change or augment its form. Examples include sorting, filtering, validation and reshaping.
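As a rough sketch, the four example operations can be combined into a single function. The record layout (`date` and `cases` keys) is an assumption made for illustration and is not the schema of any real data source.

```python
from typing import Dict, List


def transform(records: List[dict]) -> Dict[str, int]:
    """Validate, filter, sort and reshape a list of daily records."""
    # Validation and filtering: drop records without a date
    # or with a case count that is not a non-negative integer.
    valid = [
        r for r in records
        if r.get("date")
        and isinstance(r.get("cases"), int)
        and r["cases"] >= 0
    ]
    # Sorting: ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    ordered = sorted(valid, key=lambda r: r["date"])
    # Reshaping: list of records -> mapping of date to case count.
    return {r["date"]: r["cases"] for r in ordered}


raw = [
    {"date": "2020-03-02", "cases": 6},
    {"date": "2020-03-01", "cases": 4},
    {"date": "", "cases": 9},             # rejected: missing date
    {"date": "2020-03-03", "cases": -1},  # rejected: negative count
]
print(transform(raw))
```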

Ingestion

The ingestion components of a data pipeline are the processes that read data from sources. An extraction process reads from each data source using the application programming interfaces (APIs) that the source provides. Before you can write code that calls those APIs, though, you have to figure out what data you want to extract through a process called data profiling: examining data for its characteristics and structure, and evaluating how well it fits a business purpose.
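A very small profiling pass might look like the sketch below. It assumes the source delivers CSV text; the column names in `SAMPLE_CSV` are made up for illustration and are not taken from the actual data set.

```python
import csv
import io
from collections import Counter

# Hypothetical sample; the real source may use different columns.
SAMPLE_CSV = """notification_date,postcode,lga_name
2020-03-01,2000,Sydney
2020-03-01,,Sydney
2020-03-02,2150,Parramatta
"""


def profile(csv_text: str) -> dict:
    """Summarise the structure of a CSV document."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    columns = list(reader.fieldnames or [])
    missing = Counter()
    distinct = {column: set() for column in columns}
    for row in rows:
        for column in columns:
            value = (row[column] or "").strip()
            if value:
                distinct[column].add(value)
            else:
                missing[column] += 1
    return {
        "rows": len(rows),
        "columns": columns,
        "missing": {c: missing[c] for c in columns},
        "distinct": {c: len(distinct[c]) for c in columns},
    }


report = profile(SAMPLE_CSV)
print(report)
```

Counts of missing and distinct values per column are usually enough to decide which fields are worth extracting.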

Processing

Batch processing is when sets of records are extracted and operated on as a group. Batch processing is sequential, and the ingestion mechanism reads, processes, and outputs groups of records according to criteria set by developers and analysts beforehand. The process does not watch for new records and move them along in real time, but instead runs on a schedule or acts based on external triggers.

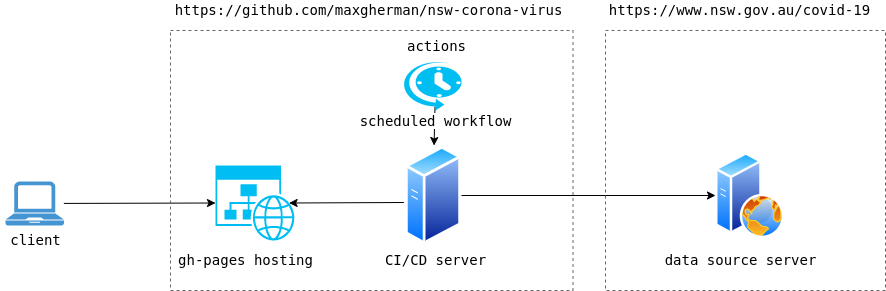
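Batch processing, as described above, can be illustrated with a minimal sketch. The batch size and the per-batch operation (a sum) are arbitrary choices for the example; `run_batch_job` stands for a single scheduled run and is a hypothetical name.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(records: Iterable[int], size: int) -> Iterator[List[int]]:
    """Yield consecutive groups of at most `size` records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def run_batch_job(records: Iterable[int], size: int) -> List[int]:
    """One run of the job: process each batch in order, then stop.

    Nothing here watches for new records; the job is expected to be
    started again by a schedule or an external trigger.
    """
    return [sum(batch) for batch in batches(records, size)]


daily_cases = [4, 6, 3, 7, 1]
totals = run_batch_job(daily_cases, size=2)
print(totals)
```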

Architecture

That was a mouthful, but it helps get everybody on the same page. Now, to be more specific about the pipeline itself: we are going to extract data from the official COVID statistics source (NSW, Australia), transform it to fit our needs for visual and descriptive statistics, and visualize the results using a static SPA. As we decided, there will be no money involved. That means no servers. Everything will be hosted using GitHub static hosting. Ingestion and transformation will be handled by GitHub Actions (free tier).

- GitHub Action is triggered on a schedule

- CI/CD agent downloads data from the third-party server

- CI/CD agent builds static website assets

- CI/CD agent pushes downloaded data along with the build artifacts into the gh-pages branch

- Client connects to GitHub static website hosting
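The download and build steps above could be approximated by a script that the CI/CD agent runs. The sketch below is an assumption, not the project's actual build: `build_site`, the file names `data.csv` and `index.html`, and the injected `fetch` callable are all hypothetical. The scheduled trigger and the push to the gh-pages branch are left to the CI configuration and are not modelled here.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def build_site(fetch: Callable[[], str], out_dir: Path) -> List[str]:
    """Download the data and write it next to the static assets."""
    out_dir.mkdir(parents=True, exist_ok=True)
    # Download: `fetch` is injected so the sketch runs without a network.
    raw = fetch()
    (out_dir / "data.csv").write_text(raw, encoding="utf-8")
    # Build: a placeholder page stands in for the real SPA bundle.
    (out_dir / "index.html").write_text(
        "<!doctype html><title>COVID stats</title>", encoding="utf-8"
    )
    # The contents of out_dir are what would be published.
    return sorted(path.name for path in out_dir.iterdir())


with tempfile.TemporaryDirectory() as tmp:
    files = build_site(lambda: "date,cases\n2020-03-01,4\n", Path(tmp) / "site")
    print(files)
```

In a real run, `fetch` would be replaced by a function that downloads from the third-party server, for example with `urllib.request.urlopen`.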

References

Data pipeline architecture: Building a path from ingestion to analytics