On November 4th, 2019, the Azure SDK team published a release containing improved APIs for Blob storage manipulation. I finally got some time to try the new features against our existing use cases of dealing with larger files. The following is a step-by-step project setup for uploading and downloading large files using Azure Blob containers.

The use case

The initial requirement is very simple. We are going to create a web application for uploading, storing, and downloading large files provided by the user. Since everything is going to be in the cloud, we need to find a durable storage service capable of holding large amounts of unstructured data. We do not really care what our files represent, so low-cost binary storage is the best option.

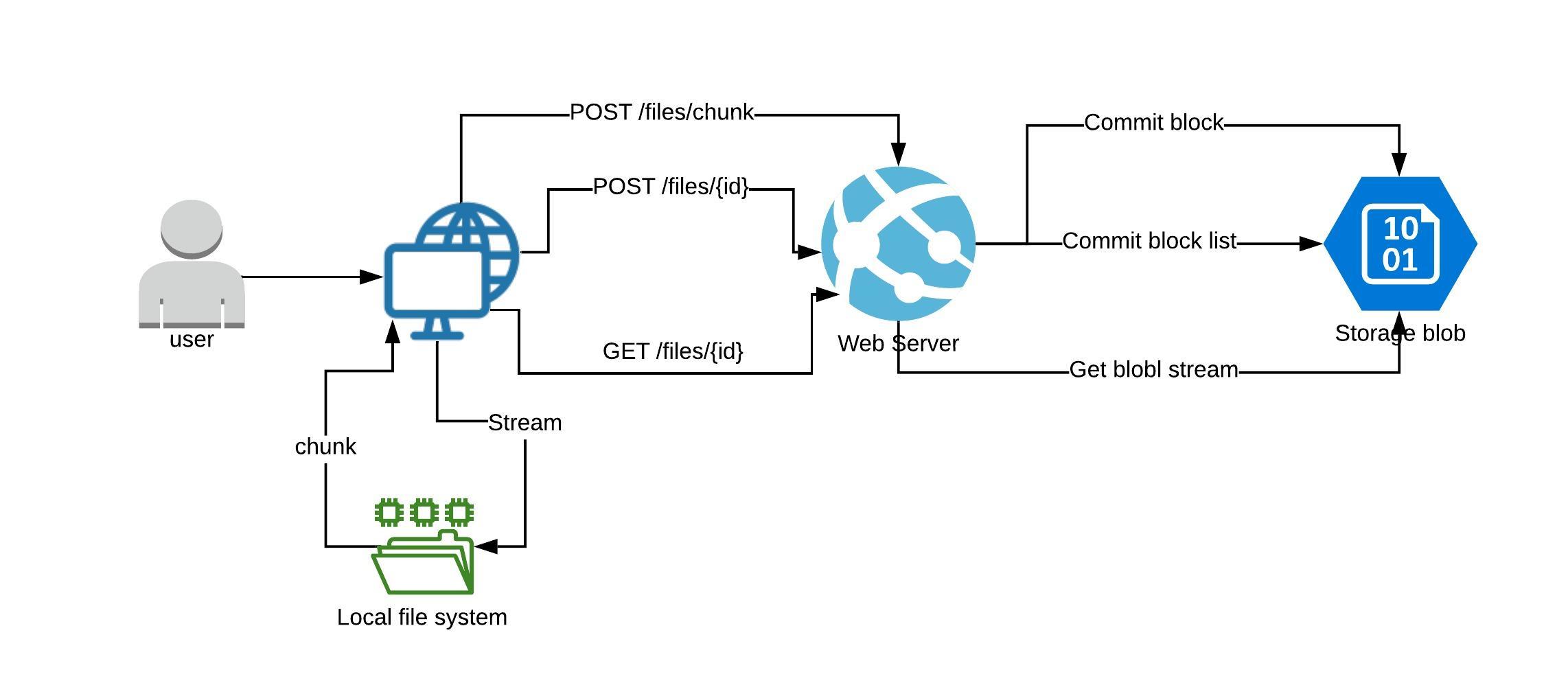

Architecture

When it comes to the web front-end, large files present a few challenges. Uploading a file in one go as an HTTP request body is not going to work. HTTP servers impose their own limit on the maximum request size (usually specified in bytes), and raising that limit is not recommended for security reasons (at least for public-facing websites). Downloading is also a problem. From the server's point of view, downloading a file usually means reading the file into memory and returning it to the client in one response. Although most web frameworks provide a way to stream a file from the local file system, this approach is ruled out for us as well for scalability reasons.

With all of these limitations in mind, the natural way of working with files in this context is to split them into chunks. Here is the high-level idea. The web browser makes the initial split, the file gets transferred piece by piece to the web server, and from there to the storage service. Once the transfer is complete, all the pieces get "glued" back together into one file at rest. For the download, the same idea of chunks can be used, but this time the HTTP protocol itself helps by wrapping the transfer in a streaming solution. It is similar to how users play a video in a browser with the ability to pause it, only for regular files.

Upload:

- User selects a list of files

- Browser splits every file into chunks

- File chunk is uploaded into the web server memory using POST /files/chunk API

- File chunk is staged into a block blob storage as a block with the associated ID

- Browser makes a final request to finalize file upload using POST /files/{id} API

- List of uncommitted blocks gets committed into a final file inside the blob container
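
The splitting step in the upload flow above can be sketched in isolation. This is only an illustration under simple assumptions: the helper name planChunks is made up here, and it computes byte ranges without touching any real file.

```javascript
// Hypothetical helper: list the byte ranges a browser would send for one file.
function planChunks(fileSize, chunkSize) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError('chunkSize must be a positive integer');
  }
  const chunks = [];
  for (let start = 0, index = 0; start < fileSize; start += chunkSize, index++) {
    // `end` is exclusive, matching Blob.prototype.slice(start, end).
    chunks.push({ index, start, end: Math.min(start + chunkSize, fileSize) });
  }
  return chunks;
}

// A 2500-byte file split into 1024-byte chunks yields three ranges,
// the last one being shorter than the others.
console.log(planChunks(2500, 1024));
```

Each entry corresponds to one chunk request, and the index is what later identifies the block.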

Download:

- User initiates file download by clicking a download link

- Browser sends an HTTP request to initiate the file download

- Web server invokes blob storage API to get a blob stream entry point

- Web server redirects file blob content streaming to the client

- Web browser saves the file in chunks using HTTP range requests
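
To make the last step more concrete, here is a rough sketch of how a single Range request header maps to a byte span of a file. In this project the web framework does this work for us, so the function below (parseByteRange, a name invented for this example) is purely illustrative and handles only the single-range form.

```javascript
// Hypothetical helper: resolve a single-range "Range" header against a file size.
// Returns { start, end } with an inclusive end, or null if the header is
// missing, malformed, multi-range, or cannot be satisfied.
function parseByteRange(header, size) {
  const match = /^bytes=(\d*)-(\d*)$/.exec(header || '');
  if (!match || size <= 0) return null;
  const [, first, last] = match;
  if (first === '' && last === '') return null;

  let start, end;
  if (first === '') {
    // Suffix form "bytes=-N": the last N bytes of the file.
    const suffix = Number(last);
    if (suffix === 0) return null;
    start = Math.max(size - suffix, 0);
    end = size - 1;
  } else {
    start = Number(first);
    end = last === '' ? size - 1 : Math.min(Number(last), size - 1);
  }
  return start > end || start >= size ? null : { start, end };
}

const range = parseByteRange('bytes=1024-2047', 10000);
// Header a server would send back together with status 206 Partial Content.
console.log(`Content-Range: bytes ${range.start}-${range.end}/10000`);
```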

Azure Blob storage

Among all Azure services Blob storage is the best offering at our disposal. It ticks all the boxes and provides additional options for later architecture evolution:

"Azure Blob storage is Microsoft's object storage solution for the cloud. Blob storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn't adhere to a particular data model or definition, such as text or binary data."

It is designed to accomplish multiple goals:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Writing to log files.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

When working with blobs, we have to choose one of three options: block blobs, append blobs, and page blobs. They are optimized for different scenarios, and the one we are interested in is covered by block blobs. From the docs:

"Azure Storage offers three types of blob storage: Block Blobs, Append Blobs and Page Blobs. Block blobs are composed of blocks and are ideal for storing text or binary files, and for uploading large files efficiently. Append blobs are also made up of blocks, but they are optimized for append operations, making them ideal for logging scenarios. Page blobs are made up of 512-byte pages up to 8 TB in total size and are designed for frequent random read/write operations."

Large files

So what does it mean to upload/download a large file? Unfortunately, it does not mean a file of infinite size. Even Azure block blobs have their limitations, but they are pretty generous:

"Block blobs let you upload large blobs efficiently. Block blobs are comprised of blocks, each of which is identified by a block ID. You create or modify a block blob by writing a set of blocks and committing them by their block IDs. Each block can be a different size, up to a maximum of 100 MB, and a block blob can include up to 50,000 blocks. The maximum size of a block blob is therefore slightly more than 4.75 TB (100 MB X 50,000 blocks)."

And there is one more limitation that I have to mention:

"When you upload a block to a blob in your storage account, it is associated with the specified block blob, but it does not become part of the blob until you commit a list of blocks that includes the new block's ID. New blocks remain in an uncommitted state until they are specifically committed or discarded. There can be a maximum of 100,000 uncommitted blocks."

Client side implementation

For the client side, we are going to go with a simple form containing a multi-file select input and some default settings for the chunk size and the number of files to upload simultaneously. The form is handled by two JavaScript files: index.js and file-uploader.js.

- index.js - all the UI related concerns

- file-uploader.js - the upload functionality itself
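
The chunk size entered in the form determines how many blocks a file produces, so it interacts with the block blob limits quoted in the previous section. Below is a rough sketch of that arithmetic. It takes the quoted figures (100 MB per block, 50,000 blocks per blob) as constants, and the helper name minChunkSize is made up for illustration.

```javascript
// Limits as quoted from the docs earlier (they may differ between service versions).
const MAX_BLOCK_SIZE = 100 * 1024 * 1024; // 100 MB per block
const MAX_BLOCKS = 50000;                 // blocks per block blob

// Largest blob these limits allow, in bytes.
const maxBlobSize = MAX_BLOCK_SIZE * MAX_BLOCKS;

// Hypothetical helper: smallest chunk size that keeps a file within MAX_BLOCKS.
function minChunkSize(fileSize) {
  if (fileSize > maxBlobSize) {
    throw new RangeError('file exceeds the maximum block blob size');
  }
  return Math.max(1, Math.ceil(fileSize / MAX_BLOCKS));
}

console.log((maxBlobSize / 1024 ** 4).toFixed(2) + ' TB maximum blob size');
console.log(minChunkSize(10 * 1024 ** 3) + ' bytes per chunk at least for a 10 GB file');
```

For instance, with a chunk size of 1024 bytes (the default in file-uploader.js), 50,000 blocks cover only about 48.8 MB, so larger files need a larger chunk size.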

index

All the DOM events logic goes here. We hook up all the user interactions to an instance of a file uploader and propagate file upload progress back to the DOM level.

document.querySelector('.octet .btn-upload')

.addEventListener('click', () => {

const input = document.querySelector('.octet .file-input');

input.click();

})

document.querySelector('.octet .file-input')

.addEventListener('change', (e) => {

const chunkSize = document.querySelector('.octet .chunk-size');

const delay = document.querySelector('.octet .delay');

const fileUploadCount = document.querySelector('.octet .file-upload-count')

const fileUploader = FileUploader(

Array.from(e.target.files),

parseInt(chunkSize.value),

parseInt(delay.value),

parseInt(fileUploadCount.value)

);

const results = document.querySelector('.octet .results');

fileUploader.reportProgress((id, name, progress) => {

let parent = document.getElementById(id);

if(!parent) {

parent = document.createElement('div');

parent.id = id;

results.appendChild(parent);

}

if(progress >= 100) {

const link = document.createElement('a');

link.href = `/files/${id}`;

link.innerHTML = name;

parent.innerHTML = '';

parent.appendChild(link);

} else {

const progressBar = Math.floor((23 * progress) / 100);

parent.innerHTML =

`${(new Array(progressBar)).fill('=').join('')}> ${progress} %`;

}

});

fileUploader.upload();

// Reset the input so that selecting the same files again fires "change".

e.target.value = '';

});

const sizeRound = document.querySelector('.octet .size-round');

document.querySelector('.octet .chunk-size')

.addEventListener('keyup', (e) => {

const value = e.target.value;

if(!value) {

sizeRound.innerHTML = '';

return;

}

sizeRound.innerHTML = formatBytes(parseInt(value), 2);

});

// https://stackoverflow.com/questions/15900485/correct-way-to-convert-size-in-bytes-to-kb-mb-gb-in-javascript

function formatBytes(bytes, decimals = 2) {

if (bytes === 0) return '0 Bytes';

const k = 1024;

const dm = decimals < 0 ? 0 : decimals;

const sizes = ['Bytes', 'KB', 'MB', 'GB', 'TB', 'PB', 'EB', 'ZB', 'YB'];

const i = Math.floor(Math.log(bytes) / Math.log(k));

return parseFloat((bytes / Math.pow(k, i)).toFixed(dm)) + ' ' + sizes[i];

}

file-uploader

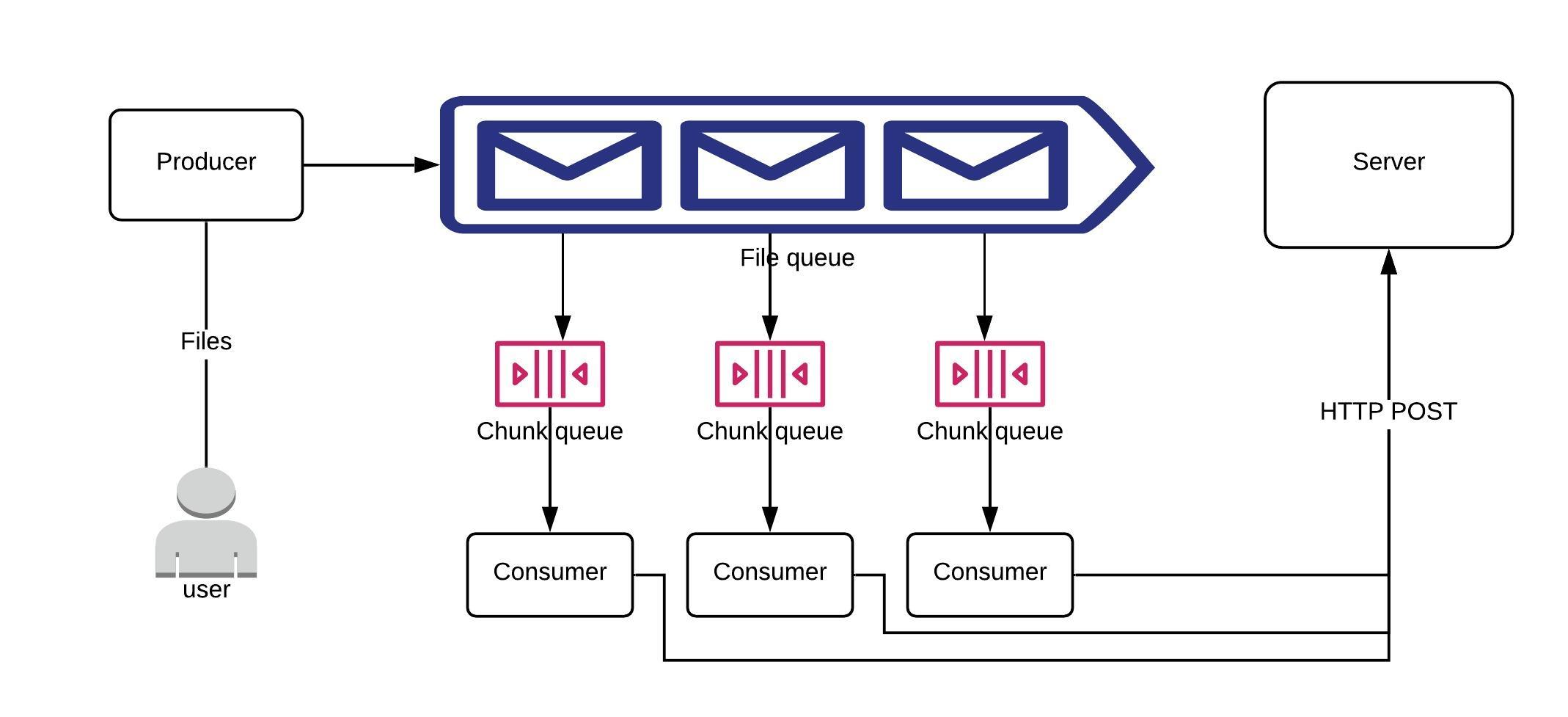

file-uploader.js represents the core logic of splitting files into chunks and uploading them to the server. The implementation is based on a very simplified version of the producer-consumer problem. All the files are put into a queue by a producer, and consumers remove them from the queue as they become available. Every file has its own queue of body chunks that are processed one by one in a sequence of HTTP POST requests. Every chunk gets a unique number within the chunk queue, which is used later as a unique block id within the final blob. Upon successful submission of all chunks, one more HTTP POST request is made to finalize them into one file in the context of a blob container.

The HTTP POST request for the chunk upload could have been implemented as a multipart form request, but since we are dealing with chunks manually, there is not much reason for that.

The fileUploadCount parameter indicates the number of consumers working with the file queue simultaneously. In other words, it regulates how many files are processed at the same time.
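
Stripped of all upload details, the producer-consumer idea can be sketched roughly like this. It is a simplified illustration rather than the uploader itself: createPool and its members are names invented for this example, and tasks signal completion through a done callback.

```javascript
// Hypothetical sketch: a queue of tasks drained by at most `limit` consumers.
// Each task receives a `done` callback and must call it exactly once.
function createPool(limit) {
  const queue = [];
  let active = 0;

  const next = () => {
    if (active >= limit || queue.length === 0) return;
    const task = queue.shift(); // FIFO: the oldest task goes first
    active++;
    task(() => {
      active--;
      next(); // a finished consumer picks up exactly one more task
    });
  };

  return {
    push(task) { queue.push(task); next(); },
    get active() { return active; },
    get pending() { return queue.length; },
  };
}

// Usage: three "uploads" with two consumers; the third waits for a free slot.
const pool = createPool(2);
const finish = [];
for (let i = 0; i < 3; i++) {
  pool.push(done => finish.push(done)); // keep `done` to complete the task later
}
console.log(pool.active, pool.pending); // 2 1
finish[0]();
console.log(pool.active, pool.pending); // 2 0
```

The important detail is that a finished task starts only one successor, which keeps the number of tasks in flight at or below the limit.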

/**

*

* @param {Array.<File>} files - array of files

* @param {number} chunkSize - chunk upload size in bytes (default 1024)

* @param {number} delay - delay between chunk uploads in milliseconds (default 0)

* @param {number} fileUploadCount - number of files to upload simultaneously (default 2)

*/

const FileUploader = (

files,

chunkSize = 1024,

delay = 0,

fileUploadCount = 2) => {

const fileQueue = [];

let progressEvent;

const splitChunk = (file, start) => {

const end = file.size;

if(start >= end) {

return {

success: false,

result: null

};

}

const chunkStart = start;

const chunkEnd = Math.min(start + chunkSize, end);

return {

success: true,

chunkEnd,

result: file.slice(chunkStart, chunkEnd)

};

}

const uploadChunk = (fileEntry) => {

if(fileEntry.chunkQueue.length <= 0) {

commitFile(fileEntry);

return;

}

const chunkEntry = fileEntry.chunkQueue.pop();

fetch('/files/chunk', {

method: 'POST',

headers: {

'Content-Type': 'application/octet-stream',

// Content-Length is set by the browser and cannot be overridden here.

...(fileEntry.fileId && {'X-Content-Id': fileEntry.fileId}),

'X-Chunk-Id': chunkEntry.index,

},

body: chunkEntry.data

})

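.then(response => {

// Treat HTTP error statuses as failures so they reach the catch handler.

if(!response.ok) {

throw new Error(`Chunk upload failed with status ${response.status}`);

}

return response.json();

})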

.then(data => {

fileEntry.fileId = data.fileId;

const chunk = splitChunk(

fileEntry.file, chunkEntry.start);

if(chunk.success) {

fileEntry.chunkQueue.push({

index: chunkEntry.index + 1,

data: chunk.result,

start: chunk.chunkEnd

})

if(progressEvent) {

const progress = (

(chunkEntry.start / fileEntry.file.size) * 100

).toFixed(2);

progressEvent(

fileEntry.fileId, fileEntry.file.name, progress);

}

}

setTimeout(() => {

uploadChunk(fileEntry);

}, delay)

})

.catch(error => {

console.log(error);

// (Optional): Implement retry chunk upload logic here

// uploadChunk(fileEntry);

});

}

const uploadFile = () => {

// Take files from the front of the queue so they upload in selection order.

const entry = fileQueue.shift();

if(!entry) {

return;

}

const chunk = splitChunk(entry.file, 0);

if(chunk.success) {

entry.chunkQueue.push({

index: 0,

data: chunk.result,

start: chunk.chunkEnd

});

uploadChunk(entry);

}

}

const commitFile = (fileEntry) => {

fetch(`/files/${fileEntry.fileId}`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({

fileName: fileEntry.file.name,

size: fileEntry.file.size

})

})

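.then(() => {

if(progressEvent) {

progressEvent(fileEntry.fileId, fileEntry.file.name, 100);

}

// Start exactly one more file so that the number of files

// in flight never exceeds fileUploadCount.

uploadFile();

})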

.catch(console.log);

}

const launchUpload = () => {

if(fileQueue.length <= 0) {

return;

}

let count = fileUploadCount;

while(count > 0) {

uploadFile();

count--;

}

}

return {

upload: () => {

const entries = files.map(file => ({

file,

chunkQueue: []

}));

fileQueue.push.apply(fileQueue, entries);

launchUpload();

},

reportProgress: (handle) => {

progressEvent = handle;

}

};

}
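
The catch handler in uploadChunk leaves the retry logic as an optional step. One possible way to approach it, sketched here under assumptions, is a retry wrapper with exponential backoff. Both backoffDelay and withRetry are hypothetical helpers, and the default delays are arbitrary example values.

```javascript
// Hypothetical helper: delay before retry number `attempt` (0-based),
// doubling from `baseMs` and capped at `maxMs`.
function backoffDelay(attempt, baseMs = 500, maxMs = 30000) {
  return Math.min(baseMs * 2 ** attempt, maxMs);
}

// Hypothetical wrapper: run `send` (a function returning a promise) and
// retry it up to `retries` times before giving up with the last error.
async function withRetry(send, retries = 3) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await send();
    } catch (error) {
      if (attempt >= retries) throw error;
      await new Promise(resolve => setTimeout(resolve, backoffDelay(attempt)));
    }
  }
}

// Delays used before the first four retries.
console.log([0, 1, 2, 3].map(n => backoffDelay(n)));
```

Since uploadChunk removes the chunk from the queue before sending it, a retry would have to resend that same chunk entry rather than read the next one from the queue.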

Server side implementation

Finally, we got to the bottom of the block blob functionality. It consists of four parts: container creation, block upload/staging, block list commit, and blob content streaming.

appsettings

First, we have to put all the configuration together.

{

"BlobStorage": {

"ConnectionString": "",

"ContainerName": "test-container"

}

}

- ConnectionString - Azure blob service connection string

- ContainerName - the name of the container to store files

Program

Next, we create a container during the application startup.

public static async Task Main(string[] args)

{

var webHost = CreateHostBuilder(args).Build();

using (var scope = webHost.Services.CreateScope())

{

var storage = scope.ServiceProvider

.GetRequiredService<IFileStorageService>();

await storage.CreateContainerAsync();

}

await webHost.RunAsync();

}

Container creation logic (CreateIfNotExistsAsync) could be invoked on every interaction with the Blob service. But for performance reasons, it is placed inside the Main method so that it is called only once.

FilesController

Files controller has three methods for uploading chunks, committing a file, and initiating a file download stream.

[HttpPost("chunk")]

public async Task<IActionResult> UploadChunk()

{

var fileId =

Request.Headers.TryGetValue("X-Content-Id", out StringValues contentId) ?

Guid.Parse(contentId) : Guid.NewGuid();

var blockId = long.Parse(Request.Headers["X-Chunk-Id"]);

using(var stream = new MemoryStream())

{

await Request.Body.CopyToAsync(stream);

stream.Position = 0;

await filesStorage.UploadBlockAsync(fileId, blockId, stream);

}

return Ok(new { FileId = fileId });

}

[HttpPost("{id}")]

public async Task<IActionResult> CommitFile(

Guid id,

[FromBody] FileMetadataModel metadata)

{

await filesStorage.CommitBlocksAsync(id, metadata.ToStorage());

return CreatedAtAction(nameof(GetFile), new { id }, null);

}

[HttpGet("{id}")]

public async Task<IActionResult> GetFile(Guid id)

{

var data = await filesStorage.DownloadAsync(id);

return File(

data.Stream,

"application/force-download",

data.Metadata.FileName,

enableRangeProcessing: true);

}

The enableRangeProcessing parameter set to true enables HTTP range requests. This way, the client can request a file piece by piece while saving the pieces to disk (streaming).

FileStorageService

File storage service is where all the communication with Azure happens. It complements the controller functionality with a few twists.

For the block uploading part, we MD5 hash the content and send it along with the data to enforce upload integrity. But there is also one detail about the block id:

"Block IDs are strings of equal length within a blob. Block client code usually uses base-64 encoding to normalize strings into equal lengths. When using base-64 encoding, the pre-encoded string must be 64 bytes or less. Block ID values can be duplicated in different blobs"

To satisfy this requirement, we convert chunk ids of type long to a string with a fixed length of 20 characters and then to a base64 string. Feel free to use a different string length, as long as it can hold all the chunk ids.

public async Task UploadBlockAsync(Guid fileId, long blockId, Stream block)

{

var client = CreateBlockClient(fileId);

using (var md5 = MD5.Create())

{

var hash = md5.ComputeHash(block);

block.Position = 0;

await client.StageBlockAsync(

GenerateBlobBlockId(blockId),

block,

hash

);

}

}

private string GenerateBlobBlockId(long blockId)

{

return Convert.ToBase64String(

Encoding.UTF8.GetBytes(

blockId.ToString("d20")

)

);

}

private BlockBlobClient CreateBlockClient(Guid fileId)

{

// The block blob client is built directly from the connection string,

// the container name and the blob name (the file id).

return new BlockBlobClient(

blobStorageConfig.ConnectionString,

blobStorageConfig.ContainerName,

fileId.ToString());

}
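
To see what the generated block ids look like, the same scheme (a number zero-padded to 20 digits, then base64-encoded) can be mirrored in a few lines of JavaScript. This is only an illustration and not part of the project. The function names are made up, and the sketch assumes non-negative integer indexes small enough to fit a JavaScript number.

```javascript
// Mirrors the idea of the C# GenerateBlobBlockId: zero-pad to 20 digits, then base64.
// btoa/atob are available in browsers and as globals in recent Node.js versions.
function toBlockId(index) {
  return btoa(String(index).padStart(20, '0'));
}

// Inverse: decode the base64 string and parse the padded digits.
function fromBlockId(blockId) {
  return Number(atob(blockId));
}

// Every id has the same length, regardless of the index it encodes.
console.log(toBlockId(0), toBlockId(0).length);
console.log(toBlockId(49999), toBlockId(49999).length);
console.log(fromBlockId(toBlockId(42)));
```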

When all the blocks are uploaded, we have to commit them, otherwise they are discarded after 7 days. We also save the file metadata (name, size) together with the content. The metadata becomes useful later during the file download.

Committing a list of blocks requires a list of the ids of those blocks. The service does not restrict the order of ids in that list, nor does it forbid duplicates, and this is done on purpose: multiple blocks can be uploaded at the same time, and a file that consists of identical parts can reuse a single uploaded block for all of them. As a consequence, during a block list commit the ids have to be put in exactly the same order as the content they represent within the file. To accomplish this, we first download the details of all the uncommitted blocks, convert their ids back into long values, and sort them.

public async Task CommitBlocksAsync(Guid fileId, FileMetadata metadata)

{

var client = CreateBlockClient(fileId);

var blockList = await client.GetBlockListAsync();

var blobBlockIds = blockList.Value.UncommittedBlocks

.Select(item => item.Name);

await client.CommitBlockListAsync(

OrderBlobBlockIds(blobBlockIds),

null,

metadata.ToStorageMetadata()

);

}

private IEnumerable<string> OrderBlobBlockIds(IEnumerable<string> blobBlockIds)

{

return blobBlockIds.Select(Convert.FromBase64String)

.Select(Encoding.UTF8.GetString)

.Select(long.Parse)

.OrderBy(_ => _)

.Select(GenerateBlobBlockId)

.ToArray();

}
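
The ordering step is easy to try out in isolation. The sketch below is a JavaScript illustration of the same idea (decode, sort numerically, encode again). It is not the project's code, and the helper names are invented for this example.

```javascript
// Same id scheme as in the C# code: 20 zero-padded digits, base64-encoded.
const encodeId = n => btoa(String(n).padStart(20, '0'));
const decodeId = id => Number(atob(id));

// Hypothetical counterpart of OrderBlobBlockIds.
function orderBlockIds(blockIds) {
  return blockIds
    .map(decodeId)
    .sort((a, b) => a - b) // numeric comparison, not the default string sort
    .map(encodeId);
}

// Blocks staged in parallel may be listed in an order that differs from file order.
const staged = [2, 0, 10, 1].map(encodeId);
console.log(orderBlockIds(staged).map(decodeId)); // [ 0, 1, 2, 10 ]
```

Sorting the decoded numbers avoids relying on how base64 strings happen to compare with each other.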

Streaming is relatively simple. The BlobDownloadInfo class exposes the blob content as a C# Stream. The only thing left is the file metadata, which we download within the same method.

public async Task<(Stream Stream, FileMetadata Metadata)> DownloadAsync(

Guid fileId)

{

var client = CreateBlockClient(fileId);

var blob = await client.DownloadAsync();

var properties = await client.GetPropertiesAsync();

return (

Stream: blob.Value.Content,

Metadata: FileMetadata.FromStorageMetadata(properties.Value.Metadata)

);

}

Last but not least, here is the method used during startup for the container creation:

public async Task CreateContainerAsync()

{

var serviceClient = new BlobServiceClient(

blobStorageConfig.ConnectionString);

var blobClient = serviceClient

.GetBlobContainerClient(blobStorageConfig.ContainerName);

await blobClient.CreateIfNotExistsAsync();

}

Source code

References

Introduction to Azure Blob storage